https://link-springer-com.ezproxy.ub.gu.se/article/10.1007/s10664-020-09826-7

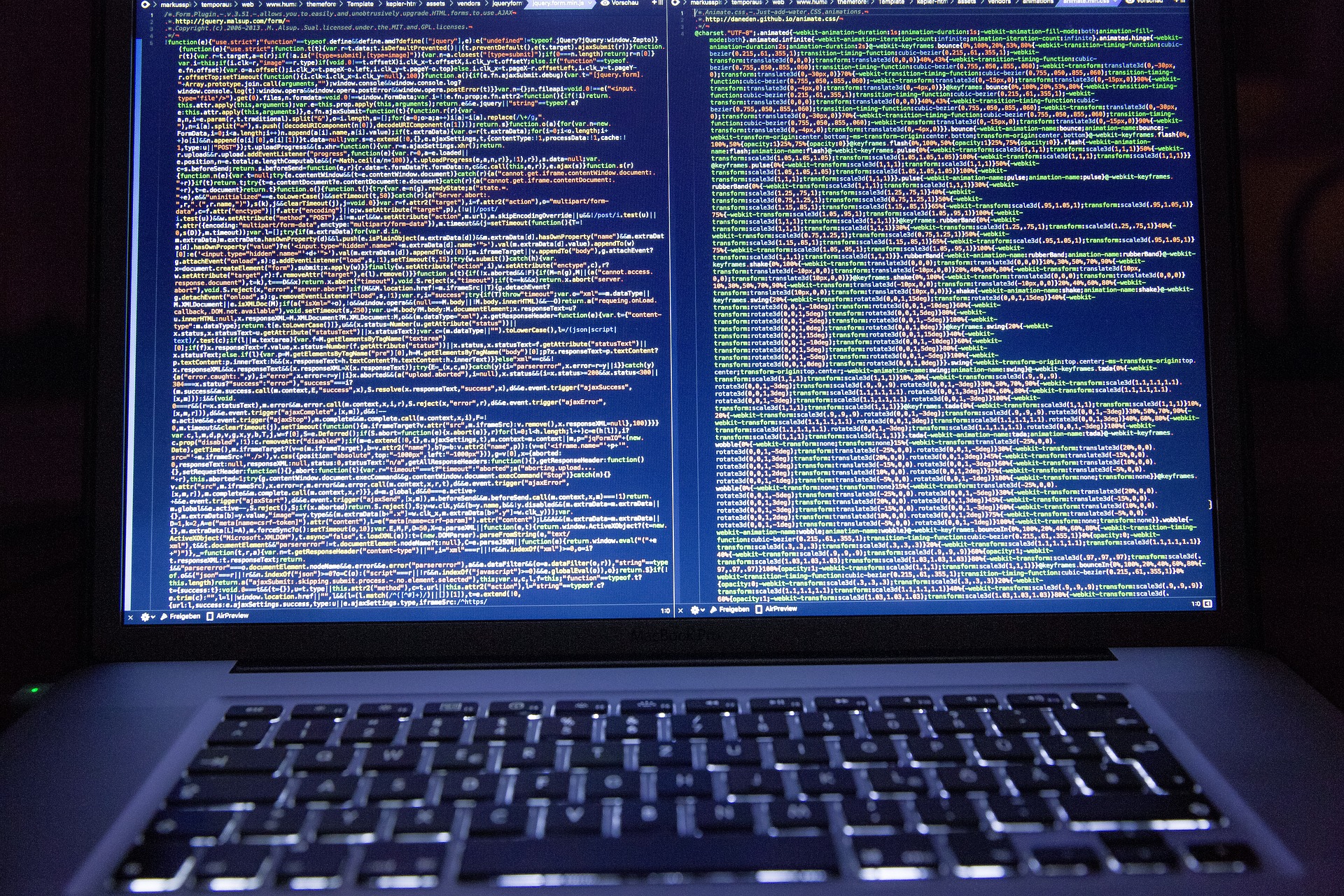

I recently read an article in Empirical Software Engineering about automated code refactoring. I must admit that I seldom refactor. It’s a tedious task and, for the software that I write, quite unnecessary. My software is often a set of scripts that solve a specific task, so the key is to document it, not to refactor it. Good documentation helps me understand what I did in that code and how it works. Yes, I know it sounds like a cliché, but that’s how it is for me. I switch tasks so often that I forget what the code was doing.

Nevertheless, I appreciate code that is nicely written, formatted and refactored. Therefore, I was on the lookout for a tool that could do something like that for me: suggest a refactoring that I could implement.

So, this is the paper that I found, and I would like to try out the tool it describes. The tool was evaluated through interviews with designers and developers. Although they could recognize that the code had been refactored, they seemed to be happy with it. So, I’m off to try out the tool :)

Abstract: Refactoring is a maintenance activity that aims to improve design quality while preserving the behavior of a system. Several (semi)automated approaches have been proposed to support developers in this maintenance activity, based on the correction of anti-patterns, which are “poor” solutions to recurring design problems. However, little quantitative evidence exists about the impact of automatically refactored code on program comprehension, and in which context automated refactoring can be as effective as manual refactoring. Leveraging RePOR, an automated refactoring approach based on partial order reduction techniques, we performed an empirical study to investigate whether automated refactoring code structure affects the understandability of systems during comprehension tasks. (1) We surveyed 80 developers, asking them to identify from a set of 20 refactoring changes if they were generated by developers or by a tool, and to rate the refactoring changes according to their design quality; (2) we asked 30 developers to complete code comprehension tasks on 10 systems that were refactored by either a freelancer or an automated refactoring tool. To make comparison fair, for a subset of refactoring actions that introduce new code entities, only synthetic identifiers were presented to practitioners. We measured developers’ performance using the NASA task load index for their effort, the time that they spent performing the tasks, and their percentages of correct answers. Our findings, despite current technology limitations, show that it is reasonable to expect a refactoring tools to match developer code. Indeed, results show that for 3 out of the 5 anti-pattern types studied, developers could not recognize the origin of the refactoring (i.e., whether it was performed by a human or an automatic tool). 
We also observed that developers do not prefer human refactorings over automated refactorings, except when refactoring Blob classes; and that there is no statistically significant difference between the impact on code understandability of human refactorings and automated refactorings. We conclude that automated refactorings can be as effective as manual refactorings. However, for complex anti-patterns types like the Blob, the perceived quality achieved by developers is slightly higher.