https://dl.acm.org/doi/pdf/10.1145/3660806

I have written a lot about code reviews. Not because this is my favourite activity, but because I think that there is room for improvement. Reading others’ code is not as fun as we may think, so making it a bit more interesting is very desirable.

This paper caught my attention because of its practical focus. In particular, the abstract stood out: the authors claim that changing the order in which files are presented for review makes a big difference. Up to 23% more comments are written when the files are arranged in the right order. Not only that, the quality of the comments seems to increase too. More tips:

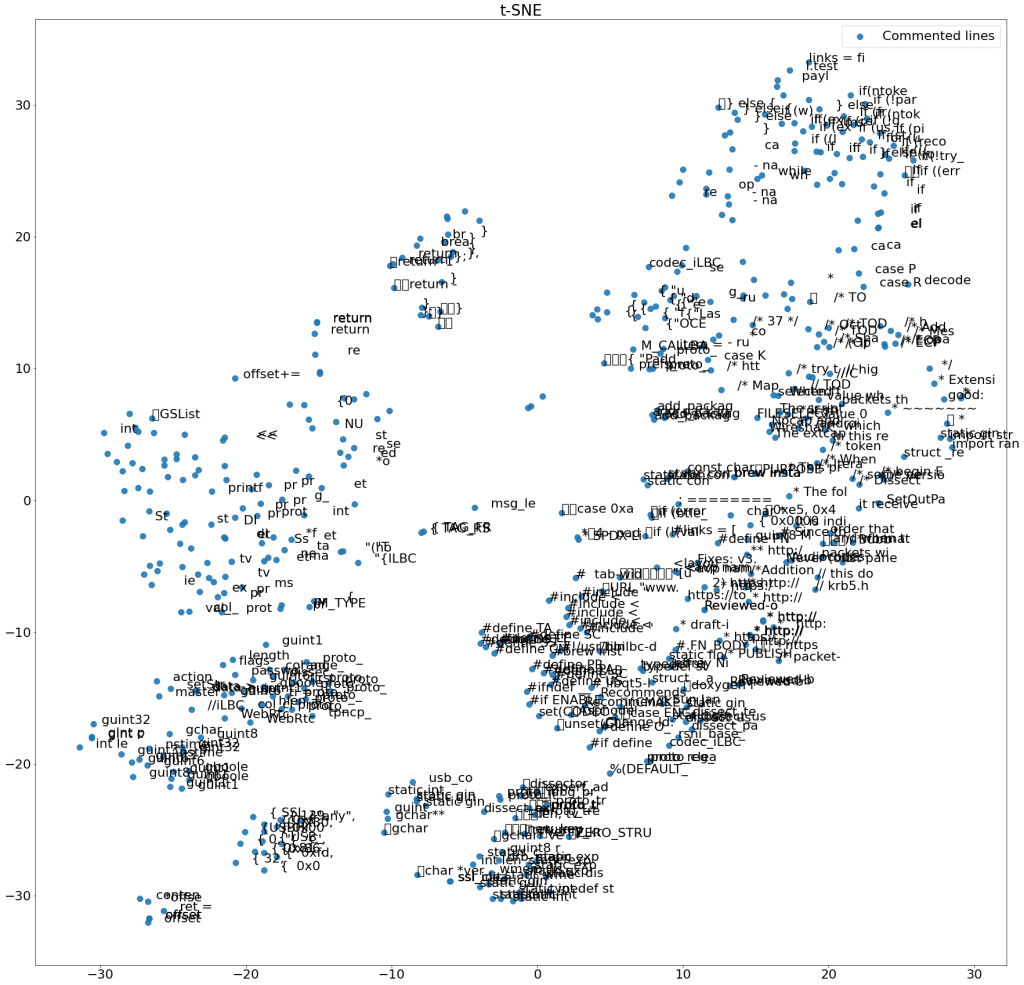

- Re-order Files Based on Hot-Spot Prediction: The study found that reordering the files changed by a patch to prioritize hot-spots—files that are likely to require comments or revisions—improves the quality of code reviews. Implementing a system that automatically reorders files based on predicted hot-spots could make the review process more efficient, as it leads to more targeted comments and a better focus on critical areas.

- Focus on Size-Based Features: The study highlighted that size-based features (like the number of lines added or removed) are the most important when predicting review activities. Emphasizing these features when prioritizing files or creating models for review could further streamline the process.

- Utilize Large Language Models (LLMs): Using an LLM to encode the text of a code change showed potential to capture the essence of the change more effectively than simpler representations like Bag-of-Words. Incorporating LLMs into review tools could improve the detection of complex or nuanced issues in the code.

- Automate Hot-Spot Detection and Highlighting: The positive impact of automatically identifying and prioritizing hot-spots suggests that integrating such automation into existing code review tools could significantly enhance the efficiency and effectiveness of the review process.
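
To make the first two tips concrete, here is a minimal sketch in Python of what reordering by a size-based score could look like. It is not the model from the paper: the `ChangedFile` type, the `hotspot_score` function, its weights, and the file paths are all illustrative assumptions, standing in for a trained hot-spot predictor.

```python
from dataclasses import dataclass


@dataclass
class ChangedFile:
    """One file touched by a patch (hypothetical structure for illustration)."""
    path: str
    lines_added: int
    lines_removed: int


def hotspot_score(f: ChangedFile) -> float:
    """Stand-in for a trained model: larger changes get a higher score.

    Assumption: the weights below are made up, not taken from the paper.
    """
    return 1.0 * f.lines_added + 0.5 * f.lines_removed


def reorder_for_review(files: list[ChangedFile]) -> list[ChangedFile]:
    """Return the files sorted so that likely hot-spots come first."""
    return sorted(files, key=hotspot_score, reverse=True)


# Made-up patch with three changed files of different sizes.
patch = [
    ChangedFile("README.md", lines_added=2, lines_removed=1),
    ChangedFile("src/engine/physics.cpp", lines_added=120, lines_removed=45),
    ChangedFile("src/ui/menu.cpp", lines_added=30, lines_removed=10),
]

ordered_paths = [f.path for f in reorder_for_review(patch)]
print(ordered_paths)
```

In a real tool, `hotspot_score` would be replaced by a model trained on past review data, while the sorting step could stay the same.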

This sounds like one of the examples where we can see large benefits of using LLMs in code reviews. I hope that this will make it into more companies than Ubisoft (a partner on that paper).

I’ve asked ChatGPT to provide me with an example of how to create such a hot-spot model, and it seems that this can be implemented in practice very easily. I will not paste it here, but please try it for yourself.