A Probabilistic Framework for Mutation Testing in Deep Neural Networks (arxiv.org)

Testing of neural networks is still an open problem. Due to the complexity of their connections and their probabilistic nature, it is difficult to find defects. Although there are a lot of approaches, e.g., using autoencoders or surprise adequacy measures, testing of neural networks is still something of a mystery to me.

I could say that the topic was under my radar for a while. I actually thought that there was not much need for testing research in software engineering, even though I run two projects with testing components. For one, I thought that deep learning is basically like a “rabbit hole”: the more you test it, the more interesting properties you discover. I’ve tried to use testing to understand what kind of things the models learn, but I’m not sure that this is the right approach. I’m afraid that we will never get there: the deep learning models learn something, and we can evaluate it, but we can never really fully understand what the models have learned.

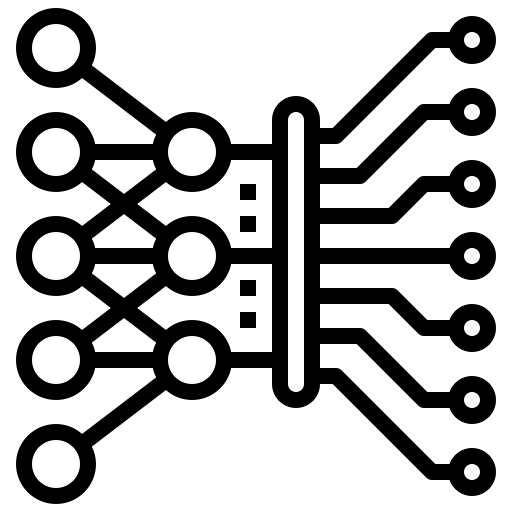

Now, this article uses mutation testing to find the best test suite for validating the models. Well, it does more than that. It offers a framework where we can use three different models to evaluate the mutants and choose the ones that are expected to provide the best results. It is built on top of frameworks/models like DeepCrime (link here) and can provide a better selection approach. So far, the framework has been evaluated only on the standard MNIST dataset, but I hope that it will be extended to other datasets in the future.
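To make the idea of "killing" a mutant a bit more concrete for myself, here is a minimal toy sketch in Python. It is not the framework from the paper and not the DeepCrime API; all names (`make_classifier`, `run_accuracies`, `kill_probability`, the `margin` parameter) are my own assumptions. The "model" is just a noisy threshold classifier, the "mutant" is the same classifier with a shifted threshold, and a test suite kills the mutant when the mutant's accuracy is clearly lower than the original's over repeated runs.

```python
import random


def accuracy(predict, test_suite):
    """Fraction of (x, label) pairs that predict() classifies correctly."""
    return sum(predict(x) == y for x, y in test_suite) / len(test_suite)


def make_classifier(threshold, noise, rng):
    """Toy stand-in for a trained model: a noisy threshold classifier (hypothetical)."""
    def predict(x):
        return int(x + rng.gauss(0, noise) > threshold)
    return predict


def run_accuracies(threshold, noise, test_suite, n_runs=10, seed=0):
    """Accuracy of n_runs independently created model instances on the test suite."""
    rng = random.Random(seed)
    return [accuracy(make_classifier(threshold, noise, rng), test_suite)
            for _ in range(n_runs)]


def kill_probability(original_runs, mutant_runs, margin=0.05):
    """Share of (original, mutant) run pairs where the mutant is worse by more than margin."""
    pairs = [(o, m) for o in original_runs for m in mutant_runs]
    return sum(m < o - margin for o, m in pairs) / len(pairs)


# Two hypothetical test suites: one far from the decision boundary, one close to it.
weak_suite = [(0.1, 0), (0.2, 0), (0.9, 1), (0.95, 1)]
strong_suite = [(0.45, 0), (0.55, 1), (0.6, 1), (0.65, 1)]

for name, suite in [("weak", weak_suite), ("strong", strong_suite)]:
    original = run_accuracies(threshold=0.5, noise=0.02, test_suite=suite)
    mutant = run_accuracies(threshold=0.7, noise=0.02, test_suite=suite, seed=1)
    print(name, kill_probability(original, mutant))
```

The point of the toy is only this: the weak suite has no inputs near the decision boundary, so it can hardly tell the mutant from the original, while the strong suite can. Because the models are stochastic, the verdict is a probability over runs rather than a single yes or no, which is how I read the "probabilistic" part of the title.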